People generally rely on common industry tools to report link utilization and use that number to estimate what is probably the most important aspect of a network: the bandwidth requirement. Let’s revisit this approach and avoid its pitfalls to make a better-informed decision.

I often come across the following thought:

The links in our network are under 40% utilized. We do not need more bandwidth.

Common but misleading

Even though this statement sounds correct, there is more to it than meets the eye. Is it possible that you need more bandwidth even though the reported link utilization is under 40%? Certainly, yes. In fact, with the deep penetration of all-flash arrays, this phenomenon is becoming more and more prevalent. I have seen this behavior in multiple deployments, ranging from small to large storage fabrics. This is a vast subject, but today I will focus on the not-so-obvious difference between link utilization and bandwidth requirement.

It depends on how and where link-utilization is calculated and reported.

What you see on an NMS

Ingress and egress bytes on a network port are counted by the switching ASICs. Specific hardware counters are incremented for every packet (or byte or bit, depending upon the architecture). This happens at microsecond granularity. Multiple layers are involved before these counters reach an end user. The ASICs export the counters to the switch operating system every few seconds (10 seconds is a common interval). The switch operating system, in turn, exports them to a remote NMS every few minutes (an SNMP walk every 5 minutes is a common deployment).

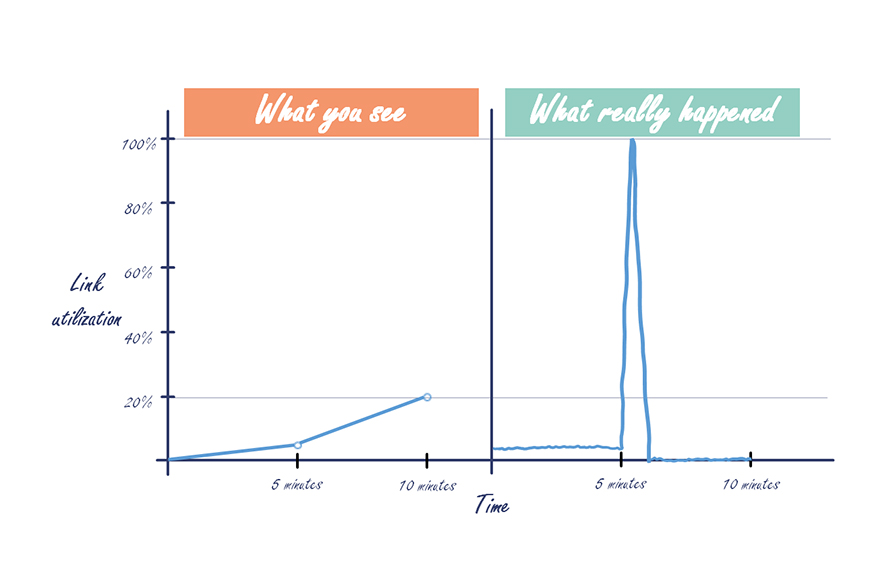

An NMS reports link utilization at a granularity of minutes. It lacks visibility into any traffic burst that might have happened between two polls.

Let’s understand this with the example of an 8G Fibre Channel port (Tip: an 8G FC port operates at 6.7 Gbps).

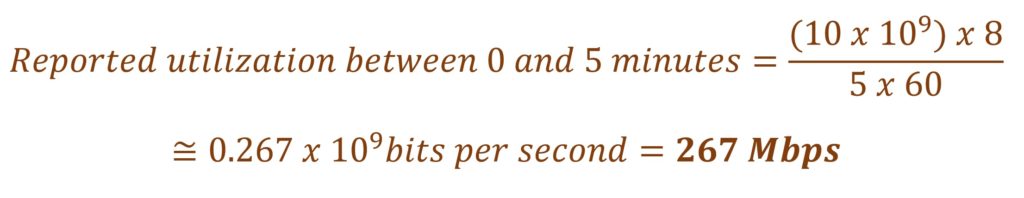

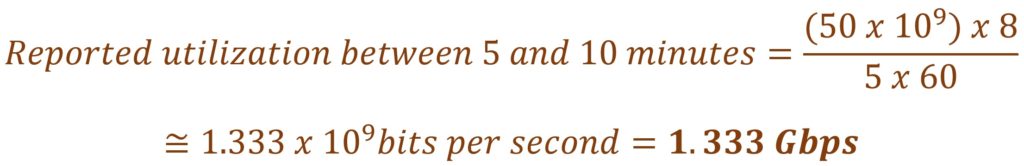

Consider three polling cycles at time 0, 5 and 10 minutes. Let’s assume the reported values are 0, 10 billion and 60 billion bytes respectively. The NMS takes the delta in the reported bytes and divides it by the polling duration to calculate the link utilization. At time = 5 minutes, the delta is 10 billion bytes. This results in 267 Mbps.

(Multiply by 8 to convert bytes to bits, then divide by the polling interval of 5 x 60 seconds)

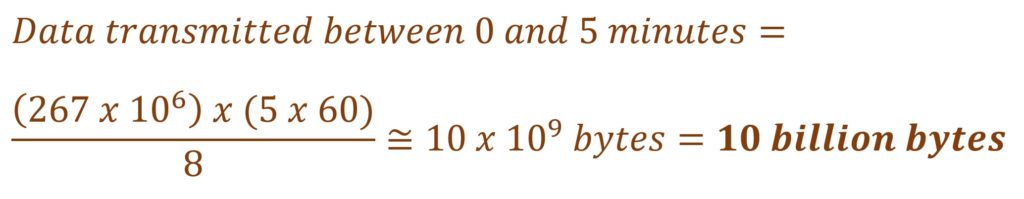

At time = 10 minutes, the delta is 50 billion bytes (60 minus 10). This results in 1,333 Mbps.

(Multiply by 8 to convert bytes to bits, then divide by the polling interval of 5 x 60 seconds)

It is a common industry practice to use this output on an NMS to report link-utilization. In this example, the link utilization is 1.3 Gbps, which is just under 20% of the 6.7 Gbps capacity of the 8G FC link. Looks good?
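The calculation above can be sketched in a few lines of Python. This is only an illustration of the arithmetic, not how any particular NMS is implemented; the function name `average_rate_bps` and the `samples` list are made up for this example, and the 6.7 Gbps capacity is the figure used in this article.

```python
# Illustrative sketch: derive average link utilization from two
# consecutive byte-counter samples, the way the example above does.

LINK_CAPACITY_BPS = 6.7e9  # 8G FC data rate assumed in this article


def average_rate_bps(prev_bytes, curr_bytes, interval_seconds):
    """Average throughput between two counter samples, in bits per second."""
    return (curr_bytes - prev_bytes) * 8 / interval_seconds


# (time in seconds, cumulative byte counter) as polled by the NMS
samples = [(0, 0), (300, 10e9), (600, 60e9)]

rates_bps = []
for (t0, b0), (t1, b1) in zip(samples, samples[1:]):
    rate = average_rate_bps(b0, b1, t1 - t0)
    rates_bps.append(rate)
    print(f"{t0:>3}s to {t1:>3}s: {rate / 1e6:,.0f} Mbps "
          f"({rate / LINK_CAPACITY_BPS:.1%} of link capacity)")
```

Note that the only inputs are two counter values and the time between them, so whatever happened inside the interval is averaged away.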

What really happens on a network port

A network port transmits and receives traffic at extremely fine granularity. The application traffic profile may be bursty in nature: for a few microseconds, a port may transmit at full capacity, followed by a period of minimal activity.

Let’s continue with the previous example of an 8G Fibre Channel port operating at 6.7 Gbps. The traffic starts at time = 0 minutes, at a constant throughput of 267 Mbps for the next 5 minutes. The network port exports the metric at the end of 5 minutes. This shows up as 10 billion bytes in the network management application.

(Divide by 8 to convert to bytes. Convert 5 minutes into seconds by multiplying by 5 x 60)

All is well till now.

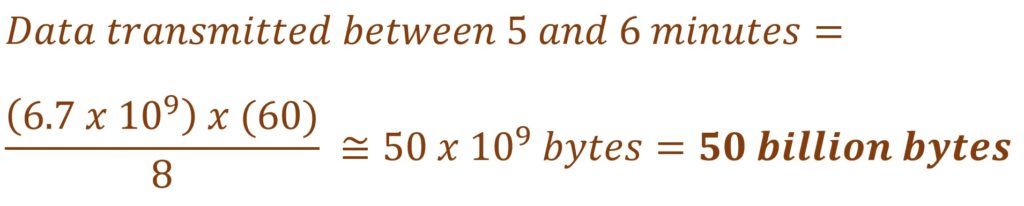

But at time = 5 minutes, the application causes a traffic burst, resulting in line-rate utilization for the next 60 seconds. At 6.7 Gbps, a port can transmit 50 billion bytes in approximately 60 seconds.

(Divide by 8 to convert to bytes. Convert 1 minute into seconds by multiplying by 60)
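As a quick check of both byte counts, here is the forward conversion from rate and duration to bytes. The helper name `bytes_transferred` is hypothetical and exists only for this sketch.

```python
# Illustrative sketch: how many bytes a port moves at a given rate
# over a given duration (rate in bits per second, duration in seconds).


def bytes_transferred(rate_bps, duration_seconds):
    """Divide by 8 to convert bits to bytes, then multiply by the duration."""
    return rate_bps / 8 * duration_seconds


steady_bytes = bytes_transferred(267e6, 5 * 60)  # first 5 minutes at 267 Mbps
burst_bytes = bytes_transferred(6.7e9, 60)       # 60 seconds at line rate

print(f"Steady phase: {steady_bytes / 1e9:.2f} billion bytes")
print(f"Burst phase:  {burst_bytes / 1e9:.2f} billion bytes")
```

Both results land within about one percent of the rounded 10 billion and 50 billion figures used in the text.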

Following this, the port remains idle for the next 4 minutes. At the end of 10 minutes, the NMS sees only the 60 billion byte total, with no visibility into the traffic burst that happened within the last 5-minute polling interval.

The reported link-utilization from an NMS clearly missed the instantaneous high bandwidth requirement on a network port.
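To make the gap concrete, the sketch below rebuilds the 10-minute example as a per-second timeline and compares the 5-minute averages with the true peak. One-second resolution is a simplifying assumption for readability (real bursts happen at microsecond scale), and the helper `window_averages` is hypothetical.

```python
# Illustrative sketch: the same traffic viewed at different granularities.

LINK_CAPACITY_BPS = 6.7e9  # 8G FC data rate assumed in this article
POLL_SECONDS = 300         # 5-minute NMS polling interval

# Per-second transmit rate for the example: 5 minutes at 267 Mbps,
# 60 seconds at line rate, then 4 minutes idle.
per_second_bps = [267e6] * 300 + [LINK_CAPACITY_BPS] * 60 + [0.0] * 240


def window_averages(rates_bps, window_seconds):
    """Average the per-second rates over consecutive fixed-size windows."""
    return [sum(rates_bps[i:i + window_seconds]) / window_seconds
            for i in range(0, len(rates_bps), window_seconds)]


nms_view = window_averages(per_second_bps, POLL_SECONDS)
peak_bps = max(per_second_bps)

for i, avg in enumerate(nms_view):
    print(f"Poll {i + 1}: {avg / LINK_CAPACITY_BPS:.1%} average utilization")
print(f"True peak: {peak_bps / LINK_CAPACITY_BPS:.0%} of link capacity")
```

The second polling window averages out to roughly 20% even though the port ran at line rate for a full minute inside it. Shrinking the window narrows the gap, but any averaging window longer than the burst itself will still understate the peak.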

One can argue that measuring link-utilization directly at the network ports would give a more realistic picture. But first, the industry does not do it that way, in order to keep things operationally simple. Second, microseconds is still far too fine a granularity at which to report any metric.

Summary

Link utilization is commonly reported from a Network Management System where data is summarized over minutes. This may be far from the reality on a network port where traffic bursts can potentially overflow the available bandwidth on the link at microsecond granularity. This breaks the correlation between the reported link-utilization and bandwidth requirement. Be aware of this not-so-obvious difference.

What does it mean for you? Always get as much bandwidth as you can because when you need more bandwidth, you need more bandwidth. There is no real alternative.