Last Updated on December 16, 2019 by Paresh Gupta

Recently, a customer reported an issue with the traffic utilization graph of a network port. The graph showed an extremely large spike after the counters were cleared on the network switch. Continue reading for a detailed explanation of the unexpected spike on the graph.

The short answer

Have you heard of problems caused by the size of a computer counter? Does the Year 2038 problem or the Y2K problem ring a bell? Have you been part of conversations where someone tried to convince you that a number would soon reach its limit, resulting in a catastrophic event? That computers at the DoD would reach judgement day, auto-fire all the nuclear warheads, and end human civilization? What you are seeing on your traffic monitoring graphs after clearing the counters on the monitored network device is a smaller and more realistic impact of the same kind of problem. The world is not ending. There is just a minor shake in the form of spikes or dips in the graph.

That was the short answer and a hint to guess the complete explanation.

Detailed and long answer

Most Network Monitoring Systems (NMS, also called front-ends or graphing engines) are designed to be agnostic to the data source.

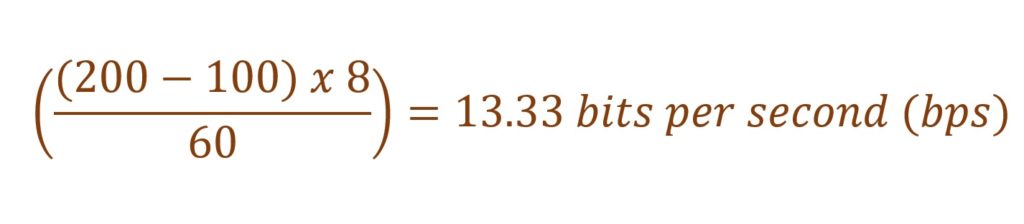

These applications receive absolute metrics. Enhanced metrics, like Rate (in Mbps or Gbps), are calculated indirectly by taking a differential from two consecutive data points and dividing by the time difference. For example:

1st data point: Time = 01:00, bytes_tx = 100

2nd data point: Time = 01:01, bytes_tx = 200

A sender sends the counters in the above format. A receiver calculates the rate as follows, multiplying by 8 to convert bytes to bits and dividing by 60 for the number of seconds in a minute:

$$\text{Rate} = \frac{(200 - 100) \times 8}{60} \approx 13.33 \text{ bps}$$

This is how most NMSs work today.

A sender does not send the change in value. It sends absolute numbers, and the receiver is expected to take the differential.
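A minimal Python sketch of the receiver-side calculation, assuming samples arrive as (seconds, bytes_tx) pairs from a one-minute poll. The function name `rate_bps` is hypothetical and is not taken from any particular NMS.

```python
def rate_bps(prev_bytes: int, curr_bytes: int, interval_s: float) -> float:
    """Naive rate: byte differential between two samples, converted to bits per second."""
    return (curr_bytes - prev_bytes) * 8 / interval_s


# Samples from the example above: (seconds since 01:00, bytes_tx)
samples = [(0, 100), (60, 200)]

(t1, b1), (t2, b2) = samples
print(f"{rate_bps(b1, b2, t2 - t1):.2f} bps")  # prints "13.33 bps"
```

Note that this naive version only works while the later value is larger than the earlier one.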

All good till now? It is time to test the limits.

Metrics like bytes_in, bytes_out, etc. are exported via counters of a pre-defined size. Most implementations today use 64-bit counters. Irrespective of the size, a counter will eventually reach its maximum value. As an academic exercise, you may want to work out how long it takes a 64-bit counter to reach its maximum value at 100 Gbps. I will leave that question for you because it is not relevant to this topic. What is relevant is what happens when the counter reaches its maximum value. In simple words, the counter rolls over and starts from 0.

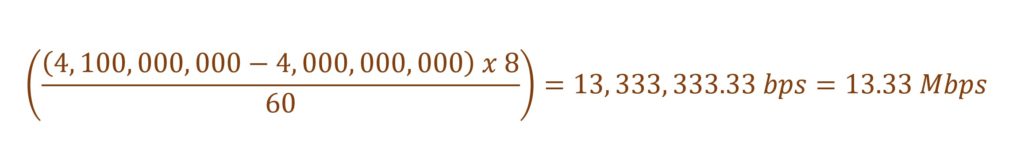

For illustration, let’s assume a 32-bit counter, which can hold $2^{32}$ = 4,294,967,296 distinct values (0 to 4,294,967,295):

1st data point: Time = 01:00, bytes_tx = 4,000,000,000

2nd data point: Time = 01:01, bytes_tx = 4,100,000,000

A sender sends the counters in the above format. The receiver applies the same logic as before:

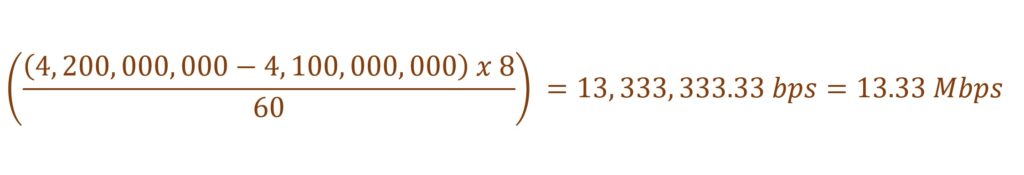

If the rate remains constant, the sender will send a 3rd data point with value 4,200,000,000.

3rd data point: Time = 01:02, bytes_tx = 4,200,000,000

Applying the same logic as above, the data rate will be:

$$\text{Rate} = \frac{(4{,}200{,}000{,}000 - 4{,}100{,}000{,}000) \times 8}{60} \approx 13.33 \text{ Mbps}$$

If we plot a graph, expect a straight line indicating a data rate of 13.33 Mbps.

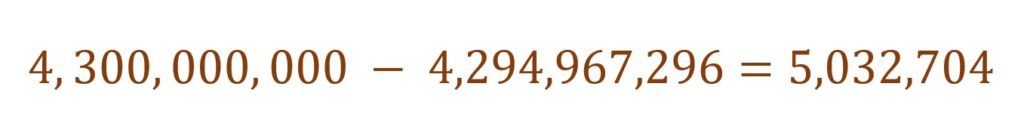

Let’s look at the 4th data point. Assuming the rate remains constant, the sender should send 4,300,000,000.

But wait!

…

A 32-bit counter can’t go beyond 4,294,967,295 ($2^{32} - 1$).

Hence, the sender:

- Counts up to the maximum value the counter can hold,

- Rolls over to start from zero, and

- Counts the remaining bytes that did not fit before the roll-over

The final value will be:

$$4{,}300{,}000{,}000 - 4{,}294{,}967{,}296 = 5{,}032{,}704$$

Hence, the 4th data point will be:

4th data point: Time = 01:03, bytes_tx = 5,032,704
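The three steps in the list above amount to modular arithmetic. The Python sketch below assumes the 32-bit counter and the constant 100,000,000 bytes per minute from the example; `advance_counter` is a hypothetical helper for illustration, not a real device API.

```python
COUNTER_BITS = 32  # illustrative counter width from the example
COUNTER_MODULUS = 2 ** COUNTER_BITS  # 4,294,967,296 distinct values: 0 .. 4,294,967,295


def advance_counter(counter: int, bytes_sent: int) -> int:
    """Add bytes_sent to a fixed-width counter; anything past the maximum wraps back through 0."""
    return (counter + bytes_sent) % COUNTER_MODULUS


counter = 4_000_000_000  # value reported at 01:00
reported = [counter]
for _ in range(3):  # 01:01, 01:02, 01:03 at a constant 100,000,000 bytes per minute
    counter = advance_counter(counter, 100_000_000)
    reported.append(counter)

print(reported)  # [4000000000, 4100000000, 4200000000, 5032704]
```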

The receiver notices that the later value is less than the previous value. It concludes that the counter must have rolled over, so it adjusts the differential accordingly:

$$\text{Rate} = \frac{\big((4{,}294{,}967{,}296 - 4{,}200{,}000{,}000) + 5{,}032{,}704\big) \times 8}{60} = \frac{100{,}000{,}000 \times 8}{60} \approx 13.33 \text{ Mbps}$$

Essentially, the sender is programmed to roll over the counters when the maximum limit is reached, and the receiver is programmed to modify the differential logic when the later value is less than the previous value.
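A roll-over-aware version of the receiver logic might look like the Python sketch below. It assumes a 32-bit counter, one-minute polling, and at most one roll-over between consecutive samples; the names are hypothetical and not taken from any specific NMS.

```python
COUNTER_MODULUS = 2 ** 32  # assumed 32-bit counter, as in the example


def rate_bps(prev_bytes: int, curr_bytes: int, interval_s: float) -> float:
    """Rate between two samples, assuming at most one roll-over in between."""
    if curr_bytes >= prev_bytes:
        delta = curr_bytes - prev_bytes
    else:
        # Later value is smaller: assume the counter rolled over exactly once.
        delta = (COUNTER_MODULUS - prev_bytes) + curr_bytes
    return delta * 8 / interval_s


samples = [4_000_000_000, 4_100_000_000, 4_200_000_000, 5_032_704]  # one per minute
rates_mbps = [round(rate_bps(a, b, 60) / 1e6, 2) for a, b in zip(samples, samples[1:])]
print(rates_mbps)  # [13.33, 13.33, 13.33]
```

In Python, `(curr_bytes - prev_bytes) % COUNTER_MODULUS` gives the same delta in one expression; the explicit branch simply mirrors the modified differential logic described above.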

So what does the counter roll-over have to do with clearing the counters?

Now that we have the background, let’s understand the output when the counters are manually cleared on the sender (network device/switch/router). Just to illustrate, I will pick numbers in the middle of the 32-bit range.

1st data point: Time = 01:00, bytes_tx = 2,000,000,000

2nd data point: Time = 01:01, bytes_tx = 2,100,000,000

3rd data point: Time = 01:02, bytes_tx = 2,200,000,000

4th data point: Time = 01:03, bytes_tx = 2,300,000,000

Following the logic explained above, these data points will show a graph with a straight line at 13.33 Mbps.

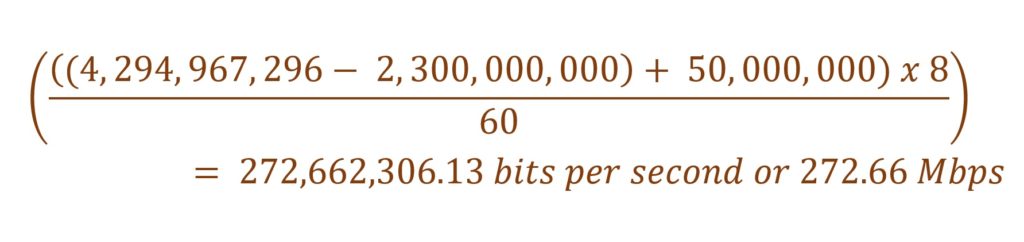

Let’s assume that the counters are cleared on the sender between 01:03 and 01:04, so bytes_tx becomes 0. If 50,000,000 bytes are sent after clearing the counters, the 5th data point will be:

5th data point: Time = 01:04, bytes_tx = 50,000,000

The receiver notices that the value at 01:04 is smaller than the value at 01:03, so it applies the roll-over logic to calculate the rate:

$$\text{Rate} = \frac{\big((4{,}294{,}967{,}296 - 2{,}300{,}000{,}000) + 50{,}000{,}000\big) \times 8}{60} = \frac{2{,}044{,}967{,}296 \times 8}{60} \approx 272.66 \text{ Mbps}$$

The graph on the NMS will be a straight line at 13.33 Mbps, except at 01:04, where it shows a spike to 272.66 Mbps. In reality, you may see a dip, a spike, or no change at all, depending on the counter values and the counter size.
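Feeding the cleared-counter samples through roll-over-aware logic reproduces the false spike. This Python sketch assumes the 32-bit counter and one-minute polling from the example; the wrap adjustment is written as a single modulo, which for one roll-over is equivalent to adding the bytes counted up to the roll-over point to the bytes counted after it. The names are hypothetical.

```python
COUNTER_MODULUS = 2 ** 32  # assumed 32-bit counter, as in the example


def rate_bps(prev_bytes: int, curr_bytes: int, interval_s: float) -> float:
    """Roll-over-aware rate: a smaller later value is treated as a single wrap."""
    delta = (curr_bytes - prev_bytes) % COUNTER_MODULUS
    return delta * 8 / interval_s


# bytes_tx at 01:00 .. 01:04; counters were cleared between 01:03 and 01:04
samples = [2_000_000_000, 2_100_000_000, 2_200_000_000, 2_300_000_000, 50_000_000]
rates_mbps = [round(rate_bps(a, b, 60) / 1e6, 2) for a, b in zip(samples, samples[1:])]
print(rates_mbps)  # [13.33, 13.33, 13.33, 272.66]
```

The receiver cannot tell a manual clear apart from a genuine roll-over using these values alone, which is why the last interval shows the spike.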

Summary

Smaller values later in the timescale may be treated as a counter roll-over. Manually clearing the counters on the back-end network device may unintentionally simulate this condition.

You can argue that this is a bug. I am more inclined towards the idea that this is a side-effect of keeping the implementations of the sender and receiver separate from each other. Can this behavior be changed? Yes, but programmers have better things to do. I am sure newer systems are being designed better, but this is a corner case, and every additional check makes the implementation more complex or limited.

So what is the recommendation?

My recommendation is to avoid clearing the counters on the sender, which can be a network switch or router. If you must clear them, ignore any unexpected spikes or dips in the graphs around that time.